How to capture video in iOS 9 and Swift 2.0

Transcript – How to capture video in iOS 9 and Swift 2.0

After the camera tutorial, many of you said that’s great, but can you show code for the video camera? How to record and play video in Swift next!

Shooting videos in Swift is almost as easy as taking photos! The real catch is what to do with the video when we’re finished. In this tutorial, we’re going to create a video, save it to the photo gallery and our documents folder, and finally we’ll play the last video we recorded.

Using the video camera can be summed up in a few steps. First we need to see if our app has access to the camera. Then we need to check if the video capture mode is available; in other words, whether the device can record video. Next we initialize an image picker controller with some flags, and present the camera view controller to the user. From there we’ll need to check whether we got a video or the user selected cancel. Once we’ve handled either case, we’re done.

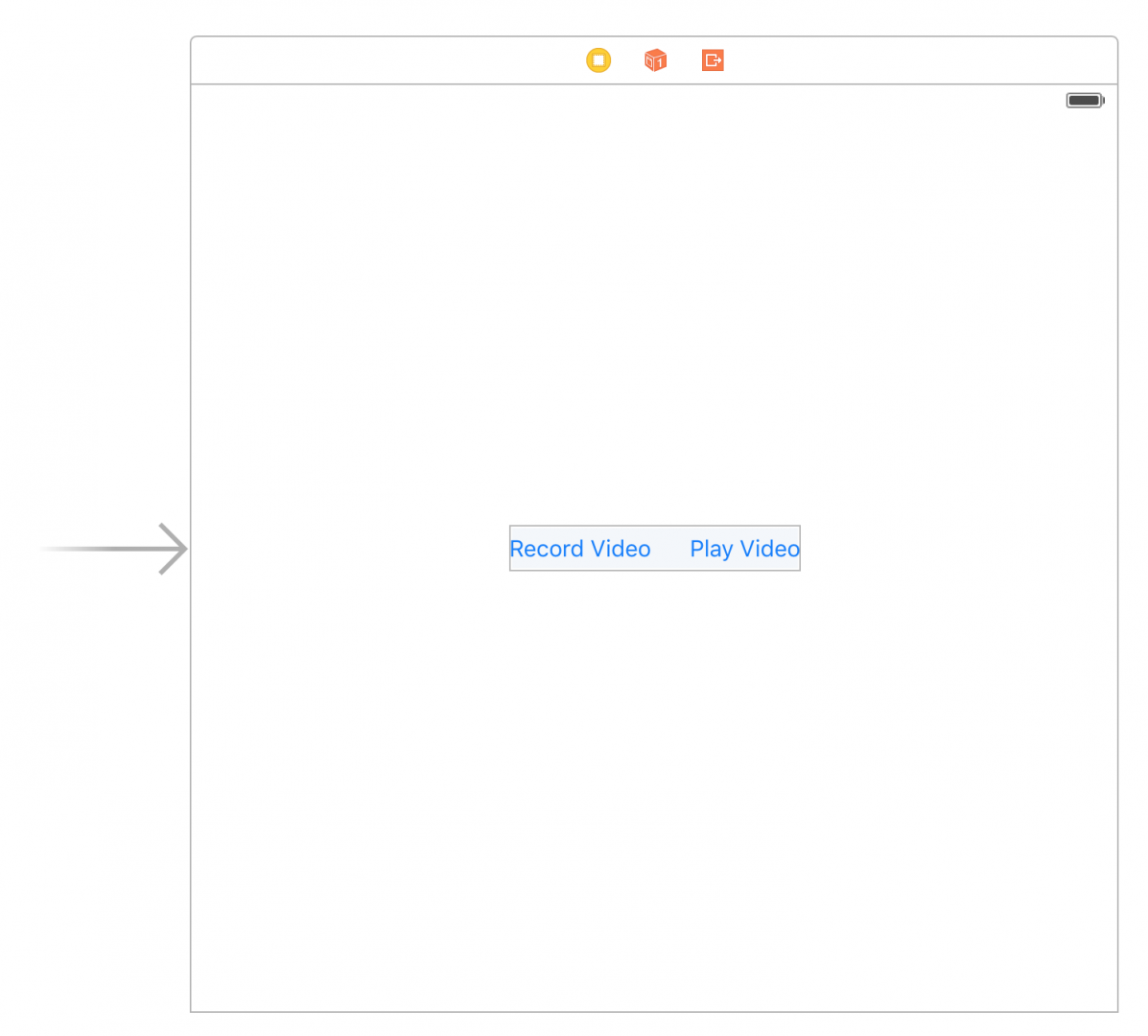

Here’s our app. There are two buttons: one records video, and the other plays the last video recorded. It’s that simple. If we record two videos, the button will only play the second video. Once we see how it’s done, we can handle videos any way we like in our app.

We are using the UIImagePickerController to capture video. For most apps, this works fine. We’ll create one for the entire app at the beginning of our view controller.

Let’s start with the IBAction for recording a video. The first thing we’ll do is check to see if we have a camera available. If we don’t, the device most likely has no usable camera, as is the case on the simulator. Access to the camera is a separate permission: iOS should ask us for it the first time we run the app, and we can change it later in the settings.

    // Record a video
    @IBAction func recordVideo(sender: AnyObject) {
        // Make sure the device has a camera we can use
        if UIImagePickerController.isSourceTypeAvailable(.Camera) {
            // Capture modes are only reported if the rear camera exists
            if UIImagePickerController.availableCaptureModesForCameraDevice(.Rear) != nil {
                imagePicker.sourceType = .Camera
                // kUTTypeMovie comes from MobileCoreServices
                imagePicker.mediaTypes = [kUTTypeMovie as String]
                imagePicker.allowsEditing = false
                imagePicker.delegate = self
                presentViewController(imagePicker, animated: true, completion: nil)
            } else {
                postAlert("Rear camera doesn't exist", message: "Application cannot access the camera.")
            }
        } else {
            postAlert("Camera inaccessible", message: "Application cannot access the camera.")
        }
    }
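The action above calls postAlert, which the transcript never shows. A minimal sketch of what such a helper could look like, in the same Swift 2 syntax as the rest of the tutorial; the body is an assumption, and the project's real helper may differ:

```swift
import UIKit

extension UIViewController {
    // Assumed implementation: shows a one-button alert with the given
    // title and message. Only the call shape comes from the tutorial.
    func postAlert(title: String, message: String) {
        let alert = UIAlertController(title: title, message: message, preferredStyle: .Alert)
        alert.addAction(UIAlertAction(title: "OK", style: .Default, handler: nil))
        presentViewController(alert, animated: true, completion: nil)
    }
}
```

It is written as an extension only to keep the sketch self-contained; in the demo app it could just as well be a method on the view controller.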

Assuming we have access, we’ll check to see if there is a rear camera. If there is, we have a video camera. The video camera was introduced a long time ago on phones that won’t support iOS 9, so checking this might be overkill. From there, we set up the image picker.
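Neither check above asks whether the user has actually granted camera permission. If we want to know that before presenting the picker, AVFoundation can tell us. This is a sketch in Swift 2 syntax; the helper name and its two callbacks are hypothetical and not part of the tutorial's project:

```swift
import AVFoundation

// Hypothetical helper: runs `onAllowed` only when the user has granted
// (or now grants) camera access, and `onDenied` otherwise.
func whenCameraIsAuthorized(onAllowed: () -> Void, onDenied: () -> Void) {
    switch AVCaptureDevice.authorizationStatusForMediaType(AVMediaTypeVideo) {
    case .Authorized:
        onAllowed()
    case .NotDetermined:
        // First run: this call triggers the system permission prompt.
        AVCaptureDevice.requestAccessForMediaType(AVMediaTypeVideo) { granted in
            // The handler may run on a background queue, so hop back
            // to the main queue before touching any UI.
            dispatch_async(dispatch_get_main_queue()) {
                if granted { onAllowed() } else { onDenied() }
            }
        }
    case .Denied, .Restricted:
        onDenied()
    }
}
```

In recordVideo we could wrap the picker setup in the first closure and call postAlert from the second.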

The source type is the camera. If we just wanted to load a video from the library, we could use .PhotoLibrary instead. We’ll set the media types to kUTTypeMovie. This constant is in MobileCoreServices, so we need to import that framework. This is pretty much the big difference from taking pictures: we just provide a different media type.
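For comparison, here is a sketch of the library variant mentioned above, again in Swift 2 syntax. The function name is hypothetical; only the source type changes, and the media type stays the same:

```swift
import UIKit
import MobileCoreServices

// Hypothetical helper: configures a picker to choose an existing movie
// from the photo library instead of recording a new one.
func configureForLibraryVideo(picker: UIImagePickerController) {
    if UIImagePickerController.isSourceTypeAvailable(.PhotoLibrary) {
        picker.sourceType = .PhotoLibrary
        picker.mediaTypes = [kUTTypeMovie as String]
    }
}
```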

We’ll also set the delegate to self. In future apps, we probably should make the delegate a separate class, but this is just a demo so we’ll make the view controller the delegate. To do that, we need to make sure the view controller implements the protocols.

These are UIImagePickerControllerDelegate and UINavigationControllerDelegate. We don’t need to add methods for UINavigationControllerDelegate, but we do need to add a few methods for UIImagePickerControllerDelegate. These handle our two camera user events: got video and user canceled. We then present the view controller, and capture a video. The next task we have to address is what to do with the video when we’re done. Let’s add methods for our delegate.
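Put together, the view controller's declaration and the cancel handler might look like the sketch below (Swift 2 syntax). The class name ViewController, the log message, and the exact value of saveFileName are assumptions; the property names imagePicker and saveFileName are the ones the tutorial's code uses:

```swift
import UIKit
import MobileCoreServices

class ViewController: UIViewController,
    UIImagePickerControllerDelegate, UINavigationControllerDelegate {

    // One picker shared by the whole view controller
    let imagePicker = UIImagePickerController()

    // File name for our saved copy (assumed value; the transcript
    // says the name is hardcoded as test.mp4)
    let saveFileName = "test.mp4"

    // Called when the user taps Cancel in the camera UI
    func imagePickerControllerDidCancel(picker: UIImagePickerController) {
        print("User canceled video")
        dismissViewControllerAnimated(true, completion: nil)
    }
}
```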

At this point the user has recorded a video, but the UIImagePickerController hasn’t told us it’s finished. It does this in the didFinishPickingMediaWithInfo delegate method. Let’s take a look at that.

The UIImagePickerController doesn’t pass the whole video back to our method. That could kill memory, especially since the new cameras do 4K. This is where videos differ a bit from photos: when UIImagePickerController is finished, it passes back a URL to the video.

Our first thought might be to save the URL and be done, but we won’t get access to the video after this point. Our app isn’t part of the same app group as the photo library. This delegate method is called from the UIImagePickerController, not us. We need to save the video to our app’s document directory.

If we have a URL, we’ll first save the video to the photo album. This is just to show how we’d save the video to the photo album. When it’s done, we’ll call a selector to perform any tasks we want. We’ll use it to check for errors.

    // Finished recording a video
    func imagePickerController(picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [String : AnyObject]) {
        print("Got a video")
        if let pickedVideo = info[UIImagePickerControllerMediaURL] as? NSURL {
            // Save video to the main photo album; the selector is called when the save finishes
            let selectorToCall = Selector("videoWasSavedSuccessfully:didFinishSavingWithError:context:")
            UISaveVideoAtPathToSavedPhotosAlbum(pickedVideo.relativePath!, self, selectorToCall, nil)
            // Save the video to the app directory so we can play it later
            let videoData = NSData(contentsOfURL: pickedVideo)
            let paths = NSSearchPathForDirectoriesInDomains(
                NSSearchPathDirectory.DocumentDirectory, NSSearchPathDomainMask.UserDomainMask, true)
            let documentsDirectory = paths[0] as NSString
            let dataPath = documentsDirectory.stringByAppendingPathComponent(saveFileName)
            videoData?.writeToFile(dataPath, atomically: false)
        }
        // Dismiss the picker once, whether or not we got a video
        imagePicker.dismissViewControllerAnimated(true, completion: {
            // Anything you want to happen when the user saves a video
        })
    }
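The selector string passed to UISaveVideoAtPathToSavedPhotosAlbum needs a matching method on the target. Here is a sketch of one that only checks for errors, in Swift 2 syntax. The wrapper class is there just to make the sketch self-contained; in the demo the method would live in the view controller, since self is the target:

```swift
import UIKit

class VideoSaveObserverSketch: NSObject {
    // Matches the selector string
    // "videoWasSavedSuccessfully:didFinishSavingWithError:context:"
    func videoWasSavedSuccessfully(video: String,
        didFinishSavingWithError error: NSError?,
        context: UnsafeMutablePointer<Void>) {
        if let error = error {
            print("Saving to the photo album failed: \(error)")
        } else {
            print("Video saved to the photo album: \(video)")
        }
    }
}
```

If we want to be careful, UIKit also provides UIVideoAtPathIsCompatibleWithSavedPhotosAlbum, which we could call on the path before attempting the save.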

Next we want to save the video to the app’s document directory. We’ll take the video URL, find the document directory, and write the video to a file there. In this example, the file name is hardcoded as test.mp4, but since it’s our app’s directory we can call it anything we like.
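Reading the whole file into NSData works for a short demo clip, but it loads the entire video into memory. One alternative is to let NSFileManager copy the file instead. The helper below is a hypothetical sketch in Swift 2 syntax, not the tutorial's code:

```swift
import Foundation

// Hypothetical helper: copies the file at `sourcePath` into `directory`
// under `fileName`, replacing any earlier copy. Returns the new path.
func copyVideo(sourcePath: String, toDirectory directory: String, fileName: String) throws -> String {
    let fileManager = NSFileManager.defaultManager()
    let destinationPath = (directory as NSString).stringByAppendingPathComponent(fileName)
    // copyItemAtPath fails if the destination already exists,
    // so remove the previous recording first.
    if fileManager.fileExistsAtPath(destinationPath) {
        try fileManager.removeItemAtPath(destinationPath)
    }
    try fileManager.copyItemAtPath(sourcePath, toPath: destinationPath)
    return destinationPath
}
```

In the delegate method we would pass pickedVideo.path as the source and the first entry returned by NSSearchPathForDirectoriesInDomains as the directory, wrapping the call in do/catch.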

Finally we dismiss the UIImagePickerController, and our video is saved. Now let’s handle the play button’s IBAction method.

To play the video, we’ll use AVKit and AVFoundation. You might find other ways to play videos on the net, but the older MPMoviePlayerController approach is deprecated as of iOS 9. Apple wants us to use AVKit now.

First we need to find the location where we saved it. We’ll build the document URL the same way we did before.

Once we have the path built, we’ll create a video asset using the URL. An AVAsset is just a class used to represent either sounds or videos. Next we need an AVPlayerItem. The AVPlayerItem is a class that manages the state of the AVAsset we’re playing, such as pausing or skipping to a particular time in the AVAsset. We create this and pass it to an AVPlayer.

The AVPlayer is a class that plays our videos, and we pass it the AVPlayerItem. Finally we pass the player to a view controller that uses the player. It seems like a lot of classes to just play a video, but the API is keeping a clear separation of concerns between the classes. It’s a good thing.

Finally we present the view controller, and ask it to play the video. And we’re done! The only hard parts to creating video in iOS are knowing what to do with the video, and then navigating all the classes in AVKit and AVFoundation. So now we can add video to our apps!
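The playback steps just described can be sketched as follows, in Swift 2 syntax. The class name, the action name playVideo, and the saveFileName value are assumptions; the chain of AVAsset, AVPlayerItem, AVPlayer and AVPlayerViewController follows the description above:

```swift
import UIKit
import AVKit
import AVFoundation

class PlaybackSketchViewController: UIViewController {

    // Assumed to match the name used when the video was saved
    let saveFileName = "test.mp4"

    // Play the last video we recorded
    @IBAction func playVideo(sender: AnyObject) {
        // Rebuild the path to the file in the documents directory
        let paths = NSSearchPathForDirectoriesInDomains(
            .DocumentDirectory, .UserDomainMask, true)
        let dataPath = (paths[0] as NSString).stringByAppendingPathComponent(saveFileName)

        // Asset -> player item -> player
        let videoAsset = AVAsset(URL: NSURL(fileURLWithPath: dataPath))
        let playerItem = AVPlayerItem(asset: videoAsset)
        let player = AVPlayer(playerItem: playerItem)

        // Hand the player to AVKit's ready-made player UI
        let playerViewController = AVPlayerViewController()
        playerViewController.player = player

        // Start playback once the player UI is on screen
        presentViewController(playerViewController, animated: true) {
            player.play()
        }
    }
}
```

A real app would probably check that the file exists first and tell the user if no video has been recorded yet.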

Thanks for watching! If you have any questions let me know in the comments or on DeegeU.com. New tutorials come out every week, so subscribe. You don’t want to miss a video! Liking the video lets me know what I’m doing right, so if you liked the video… Like it, love it, share it!

And with that, I’ll see you in the next tutorial!

Tools Used

- Java

- NetBeans

Media Credits

All media created and owned by DJ Spiess unless listed below.

- No infringement intended

Get the code

The source code for “How to capture video in iOS 9 and Swift 2.0” can be found on GitHub. If you have Git installed on your system, you can clone the repository by issuing the following command:

git clone https://github.com/deege/deegeu-ios-swift-video-camera

Go to the Support > Getting the Code page for more help.

If you find any errors in the code, feel free to let me know or issue a pull request in Git.

DJ Spiess

Your personal instructor

My name is DJ Spiess and I’m a developer with a Master’s degree in Computer Science working in Colorado, USA. I primarily work with Java server applications. I started programming as a kid in the 1980s, and I’ve programmed professionally since 1996. My main focuses are REST APIs, large-scale data, and mobile development. For the last six years I’ve worked on large National Science Foundation projects. You can read more about my development experience on my LinkedIn account.